The Art of Forgetting: How AI Learns to Understand Rather Than Memorize

Consider a high school student preparing for a math test. The teacher provides practice problems: "If John has 5 apples and gives 2 to Mary, how many does he have left?" The student diligently memorizes: "When John has 5 apples and gives 2 to Mary, the answer is 3." Then on the test, they encounter: "If Sarah has 7 oranges and gives 4 to Tom, how many does she have left?" Despite the identical mathematical principle, they struggle because they've memorized specific scenarios rather than understanding the underlying concept of subtraction.

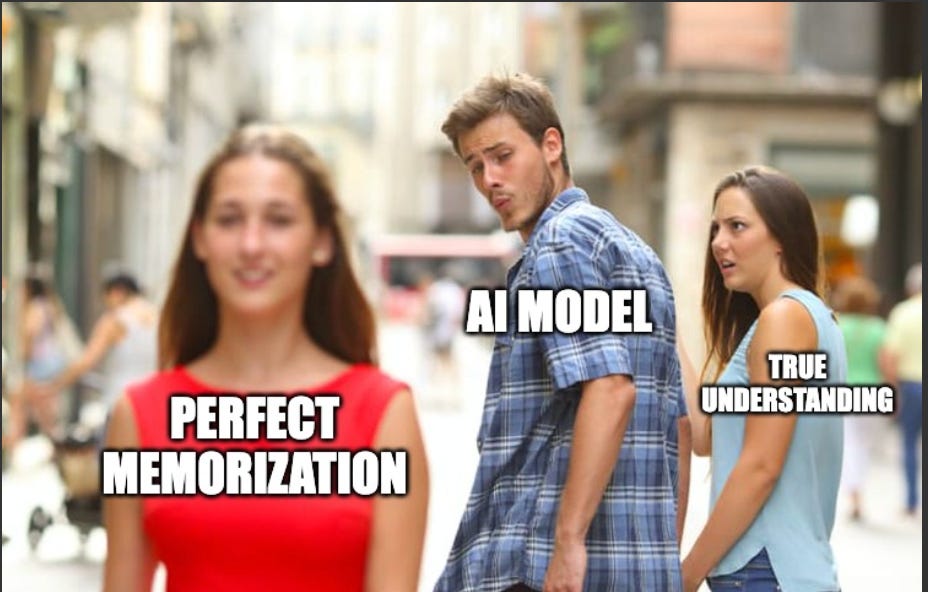

If an AI did what this student did, we would call it overfitting: the model becomes so focused on memorizing its training examples that it fails to grasp the general principles needed to solve new, similar problems. Just like our student who memorized specific math problems instead of understanding subtraction itself, an AI model can fall into the trap of remembering individual examples rather than learning the underlying patterns that let it generalize.
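To make the analogy concrete, here is a minimal Python sketch. The names `memorizer` and `rule_learner` and the practice problems are illustrative assumptions rather than part of any real library. A real model learns its rule from data instead of having it written by hand, but the gap between training and test accuracy is the same symptom.

```python
# Practice problems seen during "training": (has, gives_away) -> remaining
train = {(5, 2): 3, (9, 4): 5, (6, 1): 5}
# New problems that only appear on the "test"
test = {(7, 4): 3, (10, 6): 4}

def memorizer(problem):
    """Recalls a stored answer; returns None for any problem it has not seen."""
    return train.get(problem)

def rule_learner(problem):
    """Applies the general rule (subtraction) instead of recalling examples."""
    has, gives_away = problem
    return has - gives_away

def accuracy(model, problems):
    """Fraction of problems the model answers correctly."""
    correct = sum(model(p) == answer for p, answer in problems.items())
    return correct / len(problems)

print("memorizer   ", accuracy(memorizer, train), accuracy(memorizer, test))
print("rule learner", accuracy(rule_learner, train), accuracy(rule_learner, test))
```

The memorizer is perfect on the practice problems and wrong on every unseen one, while the rule learner is correct on both sets. That gap between training and test performance is what overfitting looks like in miniature.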

Beyond Pattern Matching

Consider a more critical scenario: training a self-driving car to recognize stop signs using only pictures taken on sunny days. The AI might learn to associate "red octagon with bright illumination" with "stop sign," but fail completely when encountering a stop sign on a rainy day or at dusk. It has overfitted to the specific lighting conditions in its training data, rather than learning the more general concept of what makes a stop sign recognizable in any condition.

This tension between memorization and understanding isn't new or limited to AI. Richard Feynman, the Nobel Prize-winning physicist known for his uncanny ability to explain complex concepts, shared a profound lesson from his childhood that perfectly captures this distinction. In his autobiography, he recounts:

"My father would take me for walks to translate names of birds. He'd say, 'See that bird? It's a Spencer's warbler.' I learned later he made that name up because he didn't know what it really was - but he said, 'In Portuguese it's a Bom da Peida, in Chinese it's a Chung-long-tah, and in Japanese it's a Katano Tekeda.' I learned all these different names for that bird in different languages. Then he said, 'Now, you know the name of that bird in all these languages, but when you're finished, you'll know absolutely nothing whatever about the bird. You only know about humans in different places and what they call the bird. Now, let's look at the bird.'"

This deceptively simple story cuts to the heart of the issue at hand. Just as knowing multiple names for a bird doesn't tell you about its behavior, habitat, or characteristics, an AI system that merely memorizes labels without understanding context and relationships hasn't truly learned.

The Art of Controlled Forgetting

To combat this challenge, there is an arsenal of techniques collectively known as regularization. Think of these as the difference between cramming for an exam and developing true expertise.

The Marie Kondo Method (L1 Regularization): L1 regularization assists AI models in identifying and retaining the most impactful features. This is analogous to decluttering a closet and keeping only the key pieces that work in multiple situations. This approach helps models focus on essential patterns rather than memorizing every minor detail.
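One common way an L1 penalty is applied in practice is a soft-thresholding step, sketched below in plain Python. The feature names, weights, and penalty strength are hypothetical, chosen only to show how small weights are set to exactly zero while large ones survive with a slight shrink.

```python
def soft_threshold(weight, strength):
    """Shrink a weight toward zero by `strength`; weights smaller than that become exactly 0."""
    if weight > strength:
        return weight - strength
    if weight < -strength:
        return weight + strength
    return 0.0

# Hypothetical learned weights for a stop-sign detector
weights = {
    "red_color": 2.0,
    "octagon_shape": -1.5,
    "background_glare": 0.05,
    "pixel_noise": -0.02,
}

pruned = {name: soft_threshold(w, strength=0.1) for name, w in weights.items()}
kept = [name for name, w in pruned.items() if w != 0.0]

print(kept)  # ['red_color', 'octagon_shape']
```

Only the two strong features remain in the closet. The minor ones are discarded outright rather than merely reduced, which is the sparsity that distinguishes L1 from L2 regularization.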

The Power Outage Practice (Dropout): Consider practicing basketball in varying conditions - sometimes in bright sunlight, sometimes in dim light. This forces the player to develop robust skills rather than relying on perfect conditions. Dropout works similarly in AI, randomly deactivating different parts of the network during training to ensure no single pathway becomes a crutch.
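As a rough illustration, the sketch below applies dropout to a list of activations using only the Python standard library. It assumes the widely used "inverted dropout" convention, in which surviving activations are scaled up during training so that nothing needs to change at inference time. The function name, values, and seed are illustrative.

```python
import random

def dropout(activations, drop_prob, rng, training=True):
    """Zero each activation with probability `drop_prob`; rescale the survivors."""
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    if not training:
        # At inference time every unit stays active and nothing is rescaled.
        return list(activations)
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]

rng = random.Random(0)  # fixed seed so the run is repeatable
hidden = [0.5, 1.0, 1.5, 2.0]

print(dropout(hidden, 0.5, rng))                  # a random subset is zeroed
print(dropout(hidden, 0.5, rng, training=False))  # unchanged at inference
```

Because a different subset of units goes dark on every training step, the network cannot rely on any single pathway.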

The Marathon Runner's Wisdom (Early Stopping): Any athlete knows that overtraining can be as harmful as undertraining. Early stopping in AI training works like a coach who knows exactly when to say "enough" - before the model starts memorizing noise instead of learning patterns.
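The coach's rule can be sketched as a "patience" counter over validation losses. This is one simple formulation among several, and the loss values below are made up for illustration. Training halts once the validation loss has failed to improve for `patience` consecutive epochs, and the best epoch seen so far is the one to keep.

```python
def early_stopping(val_losses, patience=2):
    """Return (best_epoch, stopped_at) for a sequence of per-epoch validation losses."""
    best_loss = float("inf")
    best_epoch = None
    stopped_at = None
    waited = 0  # epochs since the last improvement
    for epoch, loss in enumerate(val_losses):
        stopped_at = epoch
        if loss < best_loss:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break  # the coach says "enough"
    return best_epoch, stopped_at

# Hypothetical validation losses: improving at first, then drifting upward
val_losses = [0.90, 0.62, 0.48, 0.45, 0.47, 0.52, 0.60]
best_epoch, stopped_at = early_stopping(val_losses, patience=2)

print(best_epoch, stopped_at)  # 3 5
```

In this run the lowest validation loss occurs at epoch 3. Training stops at epoch 5, after two epochs without improvement, and the model saved at epoch 3 would be the one retained.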

The Method Actor's Approach (Data Augmentation): Just as a skilled actor doesn't merely memorize lines but learns to embody a character in any situation, data augmentation helps AI models understand concepts from multiple angles. By showing the same data in different contexts - rotating images, rephrasing sentences - we help the model grasp underlying patterns rather than surface details.
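For images, the idea can be sketched with a tiny grid of pixel values standing in for a picture. The helper names and the 2x2 "image" are illustrative assumptions. Which transformations are safe depends on the task, since a flip or rotation must not change what the label means.

```python
def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]

def rotate_90_clockwise(image):
    """Rotate the image a quarter turn clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def augment(image, label):
    """Return the original plus transformed copies, all sharing the same label."""
    variants = [image, flip_horizontal(image), rotate_90_clockwise(image)]
    return [(variant, label) for variant in variants]

image = [[1, 2],
         [3, 4]]

for variant, label in augment(image, "leaf"):
    print(variant, label)
# [[1, 2], [3, 4]] leaf
# [[2, 1], [4, 3]] leaf
# [[3, 1], [4, 2]] leaf
```

One labeled example becomes three, and the model sees that the label belongs to the object rather than to its orientation.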

The world of regularization extends far beyond the four listed here. Just as a skilled athlete must adapt their training to different terrains and conditions, the choice of regularization method depends closely on both the nature of the data and the specific task at hand. In computer vision, one might emphasize techniques that preserve spatial relationships, while natural language processing often requires methods that maintain semantic coherence. These aren't just techniques to prevent memorization - they're guidelines for teaching our models to understand the inherent structure of the problems they face.

Biological Learning Systems

Regularization techniques like dropout and data augmentation help models grasp underlying patterns rather than fixating on surface details. But nowhere does regularization prove more crucial than when we move from the realm of language and computer vision to decoding the language of life itself, where the difference between memorization and true understanding can have profound implications for human health.

Consider how large language models have learned to understand both English and Hindi effectively. When GPT encounters the word "chaiwala" in an Indian context, it understands not just the literal translation "tea seller," but the rich cultural implications and variations across regions - a feat made possible by careful regularization that prevents the model from overfitting to any single cultural context. This same challenge appears in biological AI: understanding how genes "speak" differently across diverse human populations while conveying the same fundamental biological instructions requires sophisticated regularization strategies to prevent models from becoming overly specialized.

Yet biological systems offer unique advantages that make regularization particularly effective. Unlike languages, which have evolved through countless cultural accidents, biological systems are built on fundamental physical and chemical principles that remain constant across populations. When a cell needs to respond to stress, whether it's in a person from Sweden or Singapore, it will activate similar protective pathways. These natural constraints act as a kind of built-in regularization, guiding our models toward meaningful biological patterns rather than superficial correlations.

This systems-level organization transforms how regularization operates in biological AI. Where language models must rely heavily on dropout and L1/L2 regularization to avoid getting lost in the arbitrary wilderness of human expression - why does "raining cats and dogs" mean heavy rain? - biological systems offer physical principles as additional regularizing constraints. The "culture" of biology follows the universal laws of physics and chemistry, with proteins folding and metabolic pathways functioning like consistent syntax rules whether you're studying cells in Tokyo or Toronto.

Modern deep learning architectures leverage this biological structure through carefully regularized hierarchical understanding. At their foundation, dropout helps them identify robust biological motifs - universal molecular grammar rules that transcend populations. As they progress upward, L1 regularization helps prune away population-specific noise while retaining true biological variations.

However, biological systems introduce their own regularization challenges through inherent noise and uncertainty. Gene expression patterns can vary significantly even within the same population - imagine trying to learn a language where every speaker occasionally speaks random words. Here, regularization becomes crucial not just for preventing overfitting but for distinguishing signal from noise. Early stopping prevents our models from learning spurious patterns in noisy data, while data augmentation helps them understand the natural range of biological variation.

This multilayered challenge represents a new frontier for regularization techniques. Through careful application of both traditional methods and domain-specific constraints, we're teaching AI to speak the universal language of biology while respecting its countless dialects. The result is models that can transfer knowledge from data-rich populations to data-scarce ones, identifying universal biological principles while acknowledging population-specific variations - much like a doctor who combines textbook knowledge with an understanding of how diseases manifest differently across communities.

Learning to Forget

As AI continues to evolve and tackle increasingly complex challenges, our understanding of regularization provides fascinating insights into a fundamental question: how do we build AI systems that can truly learn and adapt like biological intelligence? While current large language models and AI agents can process vast amounts of information, they still struggle with something that comes naturally to biological systems - knowing what to forget.

In this light, regularization isn't just a technical solution to overfitting - it's a glimpse into a deeper truth about intelligence itself. True understanding comes not from perfect recall, but from the ability to extract and retain meaningful patterns while letting go of the unnecessary. Perhaps the next major breakthrough in AI won't come from expanding our models' capacity to remember, but from refining their ability to forget well.

After all, as both neuroscience and computer science are teaching us, intelligence isn't measured by the volume of information retained, but by the wisdom to know which patterns matter - just as nature has been showing us all along.